Flowlab is a mobile AR app that allows users to create interactive sound sculptures by launching glowing orbs that react to audio from different frequency bands and blend smoothly together to create one-of-a-kind visual effects.

The experience can be used anywhere as a soothing meditative device, but the real magic happens in live music settings where users can create their own visual performance that syncs to the music in real time.

The app was built as my submission to the Loak x Niantic AR-CADE Challenge hackathon, and was selected as a winner for Best Visuals.

My submission video should give you a feel for the type of experience Flowlab has to offer. If you want to learn more, you can view the challenge on Loak's website here.

Although I've created a number of successful effects in my explorations into audio-reactive AR, I wanted to focus on interactivity for this experience. Always seeking to push the boundaries of my knowledge, I decided to create an interactive raymarching shader that used real-time physics to drive movement based on user input and collisions.

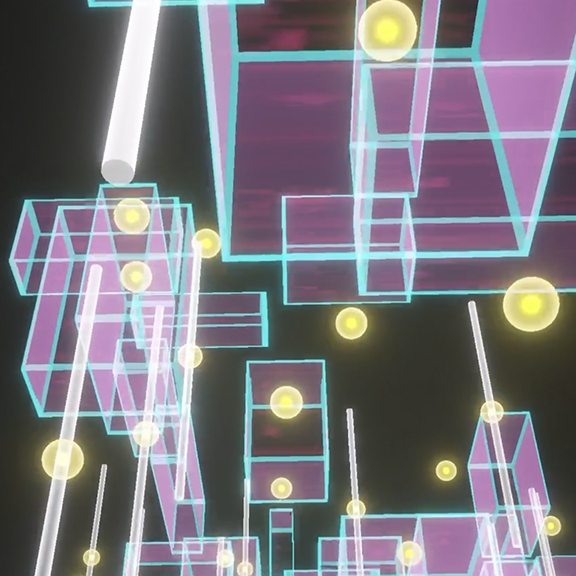

In create mode, users select a frequency band and tap the screen to launch a new orb for that band. Each frequency band is represented by a different color, and the orbs glow based on the intensity of the device's microphone input.

Using the power of raymarching, these forms and colors blend seamlessly together to create a unique interactive effect.
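For readers curious how this kind of blending usually works: raymarched shapes are described by signed distance functions, and a common way to merge them is a "smooth minimum" in place of a hard `min`. The sketch below illustrates that general technique in Python. It is not Flowlab's shader code, and the function names and the blend width `k` are assumptions chosen for the example.

```python
import math

def sphere_sdf(p, center, radius):
    """Signed distance from point p to the surface of a sphere."""
    return math.dist(p, center) - radius

def smooth_min(a, b, k):
    """Polynomial smooth minimum: behaves like min(a, b) when the two
    distances are far apart, and rounds off the seam when they are
    within k of each other. k is a hypothetical blend width."""
    h = max(k - abs(a - b), 0.0) / k
    return min(a, b) - h * h * k * 0.25

def scene_sdf(p, spheres, k=0.5):
    """Blend any number of (center, radius) spheres into one surface."""
    d = float("inf")
    for center, radius in spheres:
        d = smooth_min(d, sphere_sdf(p, center, radius), k)
    return d
```

With a larger `k`, nearby orbs melt into each other sooner; as `k` approaches zero, the result approaches a plain union of separate spheres.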

Switching over to play mode, users can overlay additional, pixel-like geometries and trigger animations through screen gestures.

The main effect was created using a custom raymarching shader in Unity. Eight spheres with colliders use real-time physics to drive their movement, and their positional data is sent to the shader to update the raymarched object positions.
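As a rough illustration of the idea, here is a minimal sphere-tracing loop in Python. It assumes the physics step has already produced a list of sphere centers, which stand in for the positions passed to the shader. The step count, hit threshold, and radius are placeholder values, and the real effect runs in a Unity shader rather than in Python.

```python
import math

def raymarch(origin, direction, centers, radius=0.5,
             max_steps=64, max_dist=20.0, eps=1e-3):
    """March a ray through a scene of equal-radius spheres.

    `direction` is expected to be a unit vector. Returns the distance
    along the ray to the first hit, or None if nothing is hit.
    """
    t = 0.0
    for _ in range(max_steps):
        # Current sample point along the ray.
        p = tuple(o + t * d for o, d in zip(origin, direction))
        # Distance to the nearest sphere surface (hard union of spheres).
        dist = min(math.dist(p, c) for c in centers) - radius
        if dist < eps:
            return t  # close enough to the surface to count as a hit
        t += dist  # safe step: no surface is closer than dist
        if t > max_dist:
            break
    return None

# Positions would be refreshed every frame from the physics simulation.
centers = [(0.0, 0.0, 0.0), (1.5, 0.0, 0.0)]
hit = raymarch((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), centers)
```

Because the scene is evaluated from the positions on every call, moving a sphere in the physics world moves the raymarched object with it.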

GPU instancing allowed me to apply the same material to each sphere without the downside of extra draw calls. Each sphere acts as a lens into the raymarch shader, which allows the raymarched objects to behave similarly to regular game objects.

The device's microphone data is then split into bands and selectively sent to each object in the shader to achieve the audio-reactive, glowing effect in different colors for each band.
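One common way to do this kind of band split is to average a magnitude spectrum over bins that double in width, so low frequencies get finer resolution than high ones. The sketch below assumes the spectrum is already available as a list of magnitudes. The band count, the starting width, and the `glow_color` helper are hypothetical and are not taken from Flowlab's code.

```python
def split_into_bands(spectrum, num_bands=8, first_width=2):
    """Average a magnitude spectrum into bands of doubling width.

    The last band absorbs whatever bins remain.
    """
    bands = []
    start, width = 0, first_width
    for i in range(num_bands):
        is_last = i == num_bands - 1
        end = len(spectrum) if is_last else min(start + width, len(spectrum))
        chunk = spectrum[start:end]
        bands.append(sum(chunk) / len(chunk) if chunk else 0.0)
        start, width = end, width * 2
    return bands

def glow_color(base_color, level, gain=1.0):
    """Scale an RGB color by a band's level, clamped to the 0..1 range."""
    return tuple(min(1.0, c * level * gain) for c in base_color)

# Example: energy only in the two lowest bins lights up only band 0.
spectrum = [1.0, 1.0] + [0.0] * 510
levels = split_into_bands(spectrum)
orb_color = glow_color((0.2, 0.6, 1.0), levels[0], gain=2.0)
```

Each orb would then read only the level for its own band, which is what keeps the colors responding to different parts of the music.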

Flowlab is just the next step in working towards my goals in this space. Although it may be a while out, the ultimate vision for this project is a location-based experience at concerts and music festivals, where users can be fully immersed through AR headsets.

The technology and hardware price point may not be there yet, but a good first step I'm working on is shared AR support, so multiple users can view the same experience.